Many schools have an honor code system, which very often prevents cheating among students during exams and tests. Students simply do not show their exams and tests to other students who are trying to cheat. One reason why students do not let others cheat may be the consequences of violating the honor code, which can be very harsh and often result in expulsion from the school. Another reason is definitely the mindset of many students on this topic. In their opinion, the purpose of exams and tests is simply to prove their knowledge and to get recognition from the professor in the form of a grade. In my experience, these two reasons are the main ones why take-home exams (even closed-book ones) can work very well in schools that have an honor code system.

Other schools do not have such an honor code system. The consequences of cheating during tests and exams are much less drastic. Being caught cheating just leads to exclusion from the exam, which can be repeated the next semester. In some cases it just leads to a lower grade. The mindset of some (but not all) students is very different as well. Cheating is widely considered a form of helping.

On the one hand, the absence of an honor code system makes the use of take-home exams very difficult. On the other hand, take-home exams have advantages for both students and professors. Students can prove their knowledge not in just 1.5 hours but can take a day or a week. So the quality of the submitted exams is generally much better. Still, there is no way to prevent students from exchanging information with each other, which is not necessarily a bad thing, because in real life this is the usual case. So we accept the exchange of information, but we do not accept copying sentences and paragraphs, with or without modification. However, if we have 60 exams with 20 pages each, there is almost no way to check for copying, unless we use electronic help.

The Idea: Using an overfitted neural network

In our classes, students need to turn in their take-home exams not only on paper but also in electronic form, as PDF files. The application we wrote reads the PDF files and stores their sentences in a list, which represents the training data. The training data is then fed into a neural network for training. In general, neural networks are used for prediction. A commonly used example is movie reviews: the application feeds reviews to the trained neural network, and the network categorizes each one as positive or negative. This is a prediction use case, and overfitting during training is not the right thing to do there. In our case, we do not want prediction. If we feed a sentence to the trained neural network, we want to know which student the sentence belongs to. So what we need is a neural network that has learned the sentences and assigns each one to the students it belongs to. Memorizing sentences in this way is exactly what overfitting does.

Loading in the training data

Students have to turn in the exams as PDF files. PDF files are generally not in a format that an application can process directly. As so often in data science, we need to bring the files into a form that a neural network can handle. Unfortunately, this can be very tedious work. Fortunately, there is a Linux program called pdftotext that converts PDF files into text. So the first step is to convert all submitted PDF files, and the PDF file of the assignment itself, into text files.
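The conversion step can be scripted. The following is only a sketch of how that might look, not the script we actually used: the function names and the folder layout are assumptions made for illustration, and the optional -layout flag of pdftotext is shown only as a possibility.

```python
import subprocess
from pathlib import Path


def build_pdftotext_command(pdf_path, layout=False):
    """Return the pdftotext command line for one PDF file.

    The text file is written next to the PDF, with the suffix .txt.
    """
    pdf_path = Path(pdf_path)
    txt_path = pdf_path.with_suffix(".txt")
    command = ["pdftotext"]
    if layout:
        # -layout asks pdftotext to keep the physical layout of the page.
        command.append("-layout")
    command += [str(pdf_path), str(txt_path)]
    return command


def convert_folder(folder, layout=False):
    """Convert every *.pdf file in folder (a hypothetical path) to text."""
    for pdf_path in sorted(Path(folder).glob("*.pdf")):
        # check=True raises an error if pdftotext fails for a file.
        subprocess.run(build_pdftotext_command(pdf_path, layout), check=True)
```

Calling convert_folder on the directory with the submitted exams would then create one text file per PDF, provided pdftotext is installed.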

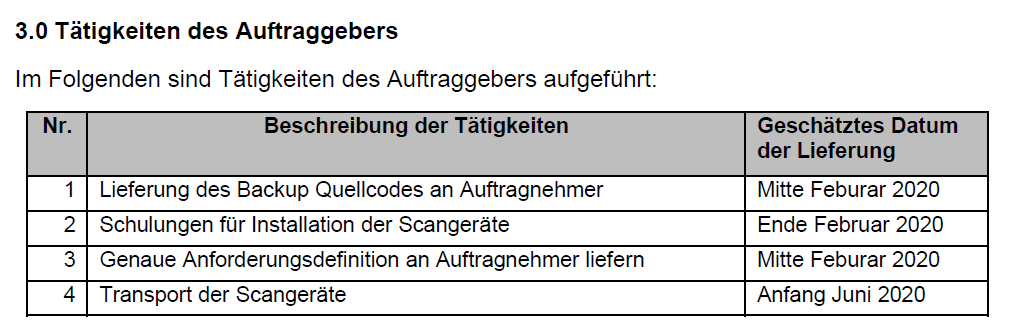

Here comes the problem for which I do not have a good solution yet. Figure 1 shows a table from a PDF file of a submitted take-home exam.

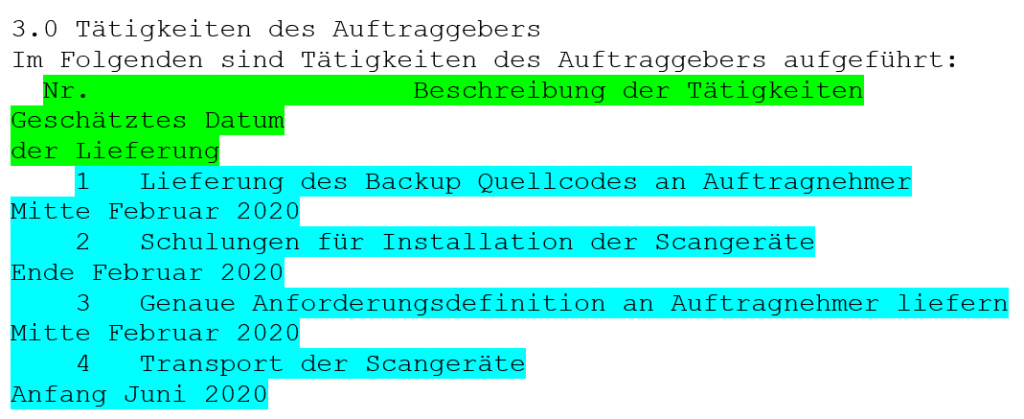

The program pdftotext converts PDF text passages into a text file very well, but the text of PDF tables is laid out in a way that is difficult to parse, because we do not know which column a sentence belongs to (see Figure 2). There are three column headings: “Nr.”, “Beschreibung der Tätigkeiten” and “Geschätztes Datum der Lieferung”. The column headings are simply written into one line (see the green line). The same is true for the table rows that follow (see the turquoise lines), each of which is also written into one line.

Ideally, the text files should list the sentences one after the other, each on its own line. However, pdftotext does not do this, especially with tables. Instead of writing an application to convert the pdftotext output into the needed format, we decided to do this step manually. Writing such an application is left for future work.
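For plain running text, a first approximation of the target format could look like the sketch below. It is not the missing application: it is a naive splitter with a hypothetical function name, it does nothing about tables, and it will split wrongly after abbreviations such as "z. B.".

```python
import re


def one_sentence_per_line(text):
    """Naively split running text into sentences.

    Hard-wrapped lines are joined first, then the text is split after
    '.', '!' or '?' whenever whitespace follows.
    """
    joined = " ".join(part.strip() for part in text.splitlines() if part.strip())
    sentences = re.split(r"(?<=[.!?])\s+", joined)
    return [s for s in sentences if s]


wrapped = "Das ist ein Satz. Das ist\nnoch ein Satz! Und ein dritter?"
for sentence in one_sentence_per_line(wrapped):
    print(sentence)
```

Anything beyond such simple passages still needs the manual editing described here.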

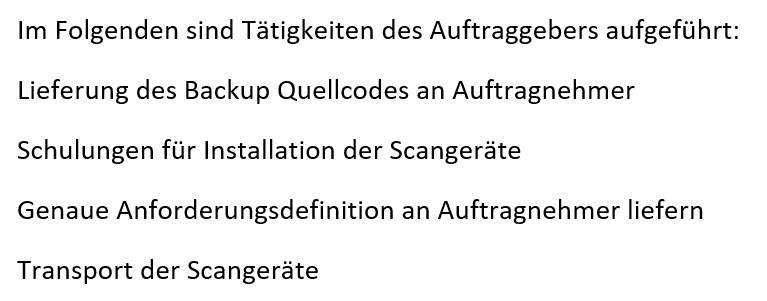

We tediously edited the pdftotext output for each take-home exam so that all sentences are listed one after the other (see Figure 3). Doing this work actually has an advantage: we get an impression of the submitted take-home exams before correcting and grading them. So editing and correcting can be done in parallel.

The following source code loads each text file and stores its sentences in the list onedoc, which is then appended to the list documents. So every sentence in documents can be addressed with two indices: the text file number and the sentence number. During this process, special characters, non-ASCII characters and digits are stripped from each sentence, and all characters are converted to lower case.

import os

def remove_non_ascii(text):
    # Replace every non-ASCII character with a space.
    return ''.join([i if ord(i) < 128 else ' ' for i in text])

def remove_digit(text):
    # Replace every digit with a space.
    return ''.join([i if not i.isdigit() else ' ' for i in text])

pathname='/home/inf/Daten/CPCHECK/WS1920/train'
sentences = []    # all sentences of all exams (used later for Word2Vec)
documents = []    # documents[file number][sentence number] is a list of words
categories = []   # one vector per exam, marking its owner
numbertodoc = {}  # document number -> file name
maxlength = 0     # length in words of the longest sentence seen
documentnumber=0

for f in os.listdir(pathname):
    if f.endswith('.txt'):
        name = os.path.join(pathname,f)
        onedoc = []
        with open(name) as fp:
            line = fp.readline()
            cnt = 1
            while line:
                line = line.strip()
                if len(line) != 0:
                    line = line.lower()
                    # Replace special characters and German umlauts.
                    line = line.replace('\\', ' ').replace('/', ' ').replace('|', ' ').replace('_', ' ')
                    line = line.replace('ä', 'ae').replace('ü', 'ue').replace('ö', 'oe').replace('ß', 'ss')
                    line = line.replace('+', ' ').replace('-', ' ').replace('*', ' ').replace('#', ' ')
                    line = line.replace('\"', ' ').replace('§', ' ').replace('$', ' ').replace('%', ' ').replace('&', ' ')
                    line = line.replace('(', ' ').replace(')', ' ').replace('{', ' ').replace('}', ' ')
                    line = line.replace('[', ' ').replace(']', ' ').replace('=', ' ').replace('<', ' ').replace('>', ' ')
                    # Normalize common German abbreviations before the dots are removed.
                    line = line.replace('i. h. v.', 'ihv').replace('u. u.', 'uu').replace('u.u.', 'uu')
                    line = line.replace('z. b.', 'zb').replace('z.b.', 'zb')
                    line = line.replace('d. h.', 'dh').replace('d.h.', 'dh').replace('d.h', 'dh')
                    line = line.replace('o.ae.', 'oae').replace('o. ae.', 'oae')
                    line = line.replace('u.a.', 'ua').replace('u. a.', 'ua')
                    line = line.replace('ggfs', 'ggf')
                    line = remove_non_ascii(line)
                    line = remove_digit(line)
                    line = line.replace('.', ' ').replace(',', ' ').replace('!', ' ').replace('?', ' ').replace(':', ' ').replace(';', ' ')
                    sentences.append(line.split())
                    onedoc.append(line.split())
                    if len(sentences[-1]) > maxlength:
                        maxlength = len(sentences[-1])
                cnt += 1
                line = fp.readline()
        documents.append(onedoc)
        numbertodoc[documentnumber] = os.path.basename(name)
        documentnumber += 1

# One category vector per exam: 1.0 at the position of the exam, 0.0 elsewhere.
for catnum in range(documentnumber):
    category = [0.0] * documentnumber
    category[catnum] = 1.0
    categories.append(category)

The list categories in the source code above is a list of vectors. The length of each vector is the number of take-home exams. Each vector identifies the owner of a take-home exam by the position of the element that is 1.0. For example, if the first element is 1.0 and the remaining elements are 0.0, the vector points to the owner of the first take-home exam. These vectors are needed as training targets for the neural network, so that each sentence of a take-home exam is assigned to its owner.
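As a small illustration of these category vectors, here is a sketch with two hypothetical helper functions (one_hot and owner_of are not part of our program): one builds such a vector, the other reads the owner back from it.

```python
def one_hot(index, size):
    """Return a vector of length size with 1.0 at position index."""
    vector = [0.0] * size
    vector[index] = 1.0
    return vector


def owner_of(vector):
    """Return the position of the largest element, i.e. the owner."""
    return vector.index(max(vector))


# With three take-home exams, the first owner is encoded like this:
print(one_hot(0, 3))
# And the owner can be read back from any category vector:
print(owner_of(one_hot(2, 3)))
```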

A third list called sentences is needed later to create a vocabulary and to embed the words. All sentences of all take-home exams are appended to this list.

Creating a vocabulary and embedding the words

Let us take a look at the sentence from above: “Im Folgenden sind Tätigkeiten des Auftraggebers aufgeführt”. It is a German sentence (what it means is irrelevant here). Its words make sense because they appear in a context. There are libraries that represent words by vectors according to the context (or sentence) in which they are used. Each word is represented by an n-dimensional vector, and words used in a similar context get vectors that point in similar directions. This holds across all sentences of all take-home exams. This technique is called word embedding. The code below generates the vocabulary and embeds all words in the list sentences. Word2Vec from the gensim library assigns each word a 50-dimensional vector and returns a Word2Vec model:

from gensim.models import Word2Vec

EMBEDDED_DIM = 50
# Note: gensim 4.x renamed the parameter size to vector_size.
model = Word2Vec(sentences, min_count=1, size=EMBEDDED_DIM)
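What "pointing in similar directions" means can be made concrete with the cosine similarity of two vectors. The sketch below uses three invented 3-dimensional vectors, not real Word2Vec output; the numbers are assumptions chosen only to show the effect.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between the vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical word vectors, invented for this illustration.
toy_vectors = {
    "auftraggeber": [0.9, 0.1, 0.0],
    "auftragnehmer": [0.8, 0.2, 0.1],
    "datum": [0.0, 0.1, 0.9],
}

similar = cosine_similarity(toy_vectors["auftraggeber"], toy_vectors["auftragnehmer"])
different = cosine_similarity(toy_vectors["auftraggeber"], toy_vectors["datum"])
print(similar > different)
```

A value close to 1 means the two vectors point in nearly the same direction, a value close to 0 means they are nearly orthogonal.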

The code below shows how each word from the Word2Vec model vocabulary is entered into two dictionaries. The method keys returns the words of the created vocabulary. In tokendict each word from the model is assigned a number. Correspondingly, in worddict each number is assigned to a word from the model, so now we have a word-to-number and a number-to-word mapping. Conversions in both directions are needed because a neural network must be fed with numbers, not with words.

tokendict = {}
tokendict['noword']=0
worddict = {}
worddict[0]='noword'
i=1
for key in model.wv.vocab.keys():
    tokendict[key]=i
    worddict[i]=key
    i+=1
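The two mappings can be tried out on a toy vocabulary. This is a sketch under the assumption of a three-word vocabulary; the helper names build_dicts, encode and decode are hypothetical and only mirror what the loop above does.

```python
def build_dicts(vocabulary):
    """Build the word-to-number and number-to-word mappings.

    The number 0 is reserved for the placeholder 'noword'.
    """
    tokendict = {"noword": 0}
    worddict = {0: "noword"}
    for i, word in enumerate(vocabulary, start=1):
        tokendict[word] = i
        worddict[i] = word
    return tokendict, worddict


def encode(words, tokendict):
    """Convert a list of words into a list of numbers."""
    return [tokendict[word] for word in words]


def decode(tokens, worddict):
    """Convert a list of numbers back into a list of words."""
    return [worddict[token] for token in tokens]


toy_tokendict, toy_worddict = build_dicts(["im", "folgenden", "sind"])
tokens = encode(["sind", "im", "folgenden"], toy_tokendict)
print(tokens)
print(decode(tokens, toy_worddict))
```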

Creating the training data set

For training we need both the sentences of the take-home exams and the categories as vectors. The category vectors assign an owner to each sentence. The sentences cannot be fed into the neural network as words, so we need to convert the words into numbers. The mapping between words and numbers was already created above (tokendict and worddict). Sentences differ in length, but neural networks need fixed-size input. We assume that the maximum length of a sentence is less than 100 words. This can be verified very easily during data loading (see maxlength). So the words in the documents list are converted to numbers (using tokendict) and appended to a list x_train. Each entry of x_train is brought to a fixed size (in this case 100): all positions beyond the end of the sentence are padded with 0 (which stands for ‘noword’). The Keras function pad_sequences does exactly this. The code below creates the training data.

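# Assumed import path (standalone Keras 2.x); adjust it to your installation.
from keras.preprocessing.sequence import pad_sequences

x_train = []
y_train = []
for i in range(0, documentnumber):
    document = documents[i]
    for sent in document:
        # Convert the words of one sentence into numbers.
        tokensent = []
        for word in sent:
            tokensent.append(tokendict[word])
        x_train.append(tokensent)
        # The target of the sentence is the category vector of its exam.
        y_train.append(categories[i])
print(len(y_train))
x_train = pad_sequences(x_train, padding='post', maxlen=100)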

Each sentence needs to be assigned to one take-home exam owner. For this we append the corresponding category vector to the y_train list.
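To see what the padding does, here is a pure-Python sketch that imitates pad_sequences for the settings used above. The function pad_post is hypothetical and written only for illustration. It also shows one detail worth knowing: with the default setting truncating='pre', a sentence longer than maxlen loses its first words, not its last ones.

```python
def pad_post(sequences, maxlen, value=0):
    """Imitate pad_sequences(..., padding='post', maxlen=maxlen).

    Shorter sequences are filled with value at the end. Longer ones keep
    only their last maxlen entries, like the Keras default truncating='pre'.
    """
    padded = []
    for seq in sequences:
        seq = list(seq)[-maxlen:]
        padded.append(seq + [value] * (maxlen - len(seq)))
    return padded


# A short and a long toy sentence, padded to length 3.
print(pad_post([[3, 1], [4, 5, 6, 7]], maxlen=3))
```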

Compiling and training the model

All word vectors from the Word2Vec model have to be copied into a data structure which we call embedding_matrix. embedding_matrix is a two-dimensional array whose size is the number of words in the vocabulary (plus one) by the dimension of the word vectors (which is 50). The copying code is shown below:

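import numpy as np

embedding_matrix = np.zeros((len(model.wv.vocab)+1, EMBEDDED_DIM))
for i in range(1,len(model.wv.vocab)+1):
    # Look the vector up via model.wv; indexing the model directly is deprecated in gensim.
    embedding_matrix[i]=model.wv[worddict[i]]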

We added one to the size of the vocabulary (first line in the code above) because we additionally count the word ‘noword’. The embedding_matrix can be represented as a layer of the neural network, with the elements of the word vectors as its weights. Keras provides a layer called Embedding to incorporate the embedding_matrix. The next source code compiles the model:

# Assumed import paths (standalone Keras 2.x); adjust them to your installation.
from keras.models import Sequential
from keras import layers

modelnn = Sequential()
modelnn.add(layers.Embedding(len(model.wv.vocab)+1, EMBEDDED_DIM, weights=[embedding_matrix], input_length=100, trainable=True))
modelnn.add(layers.GlobalMaxPool1D())
modelnn.add(layers.Dense(100, activation='relu'))
modelnn.add(layers.Dense(100, activation='relu'))
modelnn.add(layers.Dense(documentnumber, activation='softmax'))
modelnn.compile(optimizer='adam',
                loss='categorical_crossentropy',
                metrics=['accuracy'])
modelnn.summary()

Note that the parameter trainable is set to True, because we want the word vector elements to be able to change during training. We added two additional layers with 100 neurons each to the model. The last layer has exactly as many neurons as there are take-home exam participants (documentnumber). We chose softmax as its activation function. Therefore, if you add up all output values of the last layer, the result is one (which is not necessarily true for the sigmoid activation function). You can consider each output value as the probability that a sentence belongs to an owner. We chose categorical cross-entropy as the loss function. We expect that one sentence can have several take-home exam owners; when the same sentence appears in the training data with different owners, the network cannot assign it to a single one, so the probability is spread over those owners. This is exactly what we want to figure out! The training is started with the following code:

y_train = np.array(y_train, dtype=np.float32)
modelnn.fit(x_train, y_train, epochs=40, batch_size=20)
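Two of the claims about the output layer and the loss can be checked with a few lines of plain Python. The sketch below is independent of Keras, and all numbers in it are invented: it shows that softmax outputs add up to one while sigmoid outputs need not, and that for a sentence labelled once with owner 0 and once with owner 1 the mean cross-entropy is smallest when the probability is split evenly.

```python
import math


def softmax(logits):
    """Turn arbitrary scores into probabilities that add up to one."""
    shifted = [x - max(logits) for x in logits]
    exps = [math.exp(x) for x in shifted]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def cross_entropy(target, predicted):
    """Categorical cross-entropy for one target vector."""
    return -sum(t * math.log(p) for t, p in zip(target, predicted) if t > 0)


def mean_loss_for_shared_sentence(p_first):
    """Mean loss if the same sentence is labelled with owner 0 and owner 1."""
    predicted = [p_first, 1.0 - p_first]
    return (cross_entropy([1.0, 0.0], predicted)
            + cross_entropy([0.0, 1.0], predicted)) / 2


scores = [2.0, 1.0, 0.1]  # invented output scores of the last layer
print(sum(softmax(scores)))
print(sum(sigmoid(s) for s in scores))
print(mean_loss_for_shared_sentence(0.5) < mean_loss_for_shared_sentence(0.9))
```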

The training takes about ten minutes on an NVIDIA 2070 graphics card. The accuracy is about 93%. We do not look at the validation accuracy, because we do not validate the model at all. We do want overfitting, because we are not predicting anything here. We simply want to know who the owners of an input sentence are.

Who copied from whom?

After training, we can call the predict method of the neural network model with sentences from the documents list. These are the same sentences we used for training. We are not actually predicting anything here, even though we use the predict method. The purpose is to receive an output vector, with one element per participant, that holds probabilities. If a probability exceeds a threshold, then there is a high chance that the sentence belongs to the owner identified by its index. Of course, one sentence can have several owners. Below are some helper functions to print out the documents that have similarities.

def sentencesFromDocument(number):
    # Return all sentences of one document as padded vectors of numbers.
    assert number < documentnumber
    sentences = []
    for sent in documents[number]:
        tokensent = []
        for word in sent:
            tokensent.append(tokendict[word])
        sentences.append(tokensent)
    return pad_sequences(sentences, padding='post', maxlen=100)

def probabilityVector(sentences):
    # Add up the owner probabilities of all given sentences.
    # A NumPy array is needed here: "+=" on a plain list would append the
    # predictions instead of adding them element-wise.
    y_sent = np.zeros((1, documentnumber))
    for sent in sentences:
        x_pred=[]
        x_pred.append(sent)
        x_pred = np.array(x_pred, dtype=np.int32)
        y_sent += modelnn.predict(x_pred)
    return y_sent

def similardocs(number, myRoundedList, thresh):
    # Build one output line for every other document whose summed
    # probability reaches the threshold.
    cats = myRoundedList[0]
    result=""
    for i in range(len(cats)):
        if cats[i] >= thresh and i != number:
            result+="\n {}: Doc: {} Sim: {}".format(i, numbertodoc[i], cats[i])
    return result

The function sentencesFromDocument returns the sentences of the document specified by number as vectors of numbers. All vectors have the size 100, the assumed maximum length of one sentence.

The function probabilityVector takes these sentence vectors as its input parameter, feeds each one into the predict method and adds up the results. It returns a vector whose elements represent, for each participant, the summed probability of owning the sentences.

The function similardocs returns a printable list of all other documents whose summed probability reaches the threshold thresh, which is passed as a parameter.
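The idea behind these helper functions can be followed on a toy example without the trained network. In the sketch below, the per-sentence probability vectors are invented, and the two function names are hypothetical; it only illustrates the summing and thresholding, under the assumption of three exams and four sentences in exam 0.

```python
def sum_probabilities(per_sentence_probs):
    """Add up the probability vectors of all sentences of one document."""
    totals = [0.0] * len(per_sentence_probs[0])
    for probs in per_sentence_probs:
        for owner, p in enumerate(probs):
            totals[owner] += p
    return totals


def suspicious_owners(totals, own_index, thresh):
    """Return the other owners whose rounded sum reaches the threshold."""
    return [i for i, total in enumerate(totals)
            if i != own_index and round(total) >= thresh]


# Invented network outputs for the four sentences of exam 0.
# Two of the sentences are shared evenly with exam 1.
exam0 = [
    [1.0, 0.0, 0.0],
    [0.5, 0.5, 0.0],
    [0.5, 0.5, 0.0],
    [0.9, 0.0, 0.1],
]
totals = sum_probabilities(exam0)
print(suspicious_owners(totals, own_index=0, thresh=1))
```

The summed value for another owner grows with every sentence that is shared with that owner, so the threshold roughly controls how many shared sentences are needed before a document is reported.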

Below is the source code that calls the helper functions above for each document.

for i in range(documentnumber):
    mylist = probabilityVector(sentencesFromDocument(i))
    myRoundedList = list(np.around(np.array(mylist),decimals=0))
    print("{}: {} ".format(i, numbertodoc[i])+similardocs(i, myRoundedList,9))

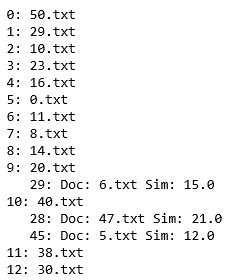

The output of the code can be seen in the figure above. At row “9:” there seems to be a hit, meaning that file 20.txt and file 6.txt have similar sentences. At row “10:” it seems that the owners of files 40.txt, 47.txt and 5.txt worked together very closely.

Conclusion

The program worked very well in finding similar sentences among the take-home exam owners. However, I do not really trust the output, so I cross-check it against the real exams. Figure 4 shows one exam that has similar or identical sentences with a second exam from another participant. All sentences that are similar or identical are marked.

Using the cheat check definitely gives a good pointer to exam owners who turned in similar sentences. So the assumption that these participants worked together is not far-fetched.

One problem that still needs to be solved is the structure of the text produced by pdftotext. Currently we need to put the text in order manually, which is quite cumbersome. In the future we need a tool that does this automatically.